How to Get the Data You Need: Part 2

Organizations with established insider threat detection programs often deploy security solutions that are optimized to perform network log monitoring and aggregation, which makes sense given that these systems excel at identifying anomalous activity outside an employee’s typical routine — such as printing from an unfamiliar printer, accessing sensitive files, emailing a competitor, visiting prohibited websites or inserting a thumb drive without proper authorization.

But relying solely on anomaly detection from network-focused security tools has several critical drawbacks. First, these tools generate far more alerts than most organizations have the analytic resources to manage. Second, they can’t inherently supply the ground truth that would provide the context needed to quickly ‘explain away’ obvious false positives. And third, they rely primarily on host and network activity data, which doesn’t capture the underlying human behaviors that are the true early indicators of insider risk.

By their very nature, standalone network monitoring systems miss the large trove of insights that can be found in an organization’s non-network data. These additional information sources can include travel and expense records, on-boarding/off-boarding files, job applications and employment histories, incident reports, investigative case data and much more.

One such source that is often overlooked (and thus underutilized) is data from access control systems. Most employees have smart cards or key fobs that identify them and provide access to a building or a room, and their usage tells a richly detailed story of the routines and patterns of each badge-holder. They can also generate distinctive signals when employees deviate from their established norms.

Although not typically analyzed in conventional security analytics systems, badge data is a valuable source of context and insight in Haystax Technology’s Constellation for Insider Threat user behavior analytics (UBA) solution. Constellation ingests a wide array of information sources — badge data included — and analyzes the evidence they contain via an analytics platform that combines a probabilistic model with machine learning and other artificial intelligence techniques.

The Constellation model does the heavy analytical lifting, assessing anomalous behavior against the broader context of ‘whole-person trustworthiness’ to reason whether or not the behavior is indicative of risk. And because the model is a Bayesian inference network, it updates Constellation’s ‘belief’ in an individual’s level of trustworthiness every time new data is applied. The analytic results are displayed as a dynamic risk score for each individual in the system, allowing security analysts and decision-makers to pinpoint their highest-priority risks.
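For readers unfamiliar with Bayesian updating, the sketch below shows the basic arithmetic of revising a belief as new evidence arrives. It is a minimal, hypothetical illustration and not Constellation’s model. It collapses the inference network into a single ‘trustworthy’ hypothesis. It also treats each piece of evidence as independent (a naive-Bayes assumption) and expresses each piece as an invented likelihood ratio.

```python
import math

def update_belief(prior: float, likelihood_ratios: list) -> float:
    """Return P(trustworthy | evidence) for a single hypothesis.

    Simplifying assumptions (not Constellation's actual model):
    - pieces of evidence are conditionally independent (naive Bayes);
    - each ratio is P(evidence | trustworthy) / P(evidence | untrustworthy),
      so ratios below 1 lower the belief and ratios above 1 raise it.
    """
    if not 0.0 < prior < 1.0:
        raise ValueError("prior must be strictly between 0 and 1")
    # Work in log-odds so each new piece of evidence is a simple addition.
    log_odds = math.log(prior / (1.0 - prior))
    for ratio in likelihood_ratios:
        log_odds += math.log(ratio)
    return 1.0 / (1.0 + math.exp(-log_odds))


# Hypothetical numbers: a 95% prior, then one mildly adverse observation
# that is twice as likely for an untrustworthy individual (ratio 0.5).
belief = update_belief(0.95, [0.5])
print(round(belief, 3))  # 0.905
```

Because the update is multiplicative, you get the same result whether you supply all the evidence in one call or in several successive calls, using each result as the next prior. That property mirrors a belief that is revised each time new data arrives.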

In some cases, the badge data is applied directly to specific model nodes. In other cases, Haystax implements detectors that calculate the ‘unusualness’ of each new access event against a profile of overall access; only when an access event exceeds a certain threshold is it applied as evidence to the model. (We also consider the date the access event occurs, so that events which occurred long ago have a smaller impact than recent events. This so-called temporal decay is accomplished via a ‘relevance half-life’ function for each type of event.)
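The ‘relevance half-life’ idea can be written as an exponential decay in which an event’s weight halves once per half-life. The sketch assumes a pure exponential form and ages measured in whole days. The text does not specify Haystax’s exact function, and `decay_factor` is a hypothetical name.

```python
from datetime import date

def decay_factor(event_date: date, as_of: date, half_life_days: float) -> float:
    """Multiplier in (0, 1] that halves every `half_life_days` days.

    Assumes simple exponential decay: weight = 0.5 ** (age / half_life).
    """
    age_days = (as_of - event_date).days
    if age_days < 0:
        raise ValueError("event_date must not be after as_of")
    return 0.5 ** (age_days / half_life_days)


# With a 14-day half-life, a two-week-old event counts half as much
# as a fresh one, and a four-week-old event a quarter as much.
today = date(2018, 10, 29)
print(decay_factor(date(2018, 10, 15), today, 14))  # 0.5
print(decay_factor(date(2018, 10, 1), today, 14))   # 0.25
```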

Besides the identity of the user, the time-stamp of the badge event is the minimum information required in order to glean insights from badge data. If an employee typically arrives around 9:00 AM each workday and leaves at 5:30 PM, then badging in at 6:00 AM on a Sunday will trigger an anomalous event. However, if the employee shows no other signs of adverse or questionable behavior, Constellation will of course note the anomaly but ‘reason’ that this behavior alone is not a significant event — one of the many ways it filters out the false positives that so often overwhelm analysts. The employee’s profile might even contain mitigating information that proves the early weekend hour was the result, say, of a new project assignment with a tight deadline. And the anomaly could be placed into further context with the use of another Constellation capability called peer-group analysis, which compares like individuals’ behaviors with each other rather than comparing one employee to the workforce at large.
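One simple way to score how ‘unusual’ a badge time is for a given person is to measure how rarely that person has badged in during that hour of the week. A threshold is then applied before the event is treated as evidence. The sketch below makes three assumptions: a per-person history of badge-in timestamps, Laplace smoothing so that never-seen hours still get a finite score, and an arbitrary 6-bit threshold. All names and numbers are hypothetical and do not describe Constellation’s detectors.

```python
import math
from collections import Counter
from datetime import datetime, timedelta

HOURS_PER_WEEK = 7 * 24

def hour_of_week(ts: datetime) -> int:
    """Bucket a timestamp into one of 168 hour-of-week slots (Monday 00:00 = 0)."""
    return ts.weekday() * 24 + ts.hour

def unusualness_bits(history: list, ts: datetime, alpha: float = 1.0) -> float:
    """Surprise, in bits, of a badge event at `ts` given this person's past badge times."""
    counts = Counter(hour_of_week(t) for t in history)
    # Laplace smoothing: every slot gets `alpha` pseudo-observations.
    p = (counts[hour_of_week(ts)] + alpha) / (len(history) + alpha * HOURS_PER_WEEK)
    return -math.log2(p)

def is_anomalous(history: list, ts: datetime, threshold_bits: float = 6.0) -> bool:
    """Only events above the (hypothetical) threshold would become model evidence."""
    return unusualness_bits(history, ts) > threshold_bits


# Twenty weeks of weekday badge-ins at 9:00 AM, starting Monday, Jan. 1, 2018.
start = datetime(2018, 1, 1, 9, 0)
history = [start + timedelta(weeks=w, days=d) for w in range(20) for d in range(5)]

print(is_anomalous(history, datetime(2018, 6, 5, 9, 10)))  # Tuesday 9:10 AM -> False
print(is_anomalous(history, datetime(2018, 6, 3, 6, 0)))   # Sunday 6:00 AM -> True
```

A peer-group variant would run the same calculation against the pooled badge history of comparable employees instead of one person’s history.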

But badge time-stamps tell only a small part of the story.

Now let’s look at insights that can be gleaned from other kinds of badge data.

Consider the case of Kara, a mid-level IT systems administrator employed at a large organization. Kara has privileged access and also a few anomalous badge times, so the Constellation ‘events’ generated from her badge data are a combination of [AccessAuthorized] and [UnusualAccessAuthorizedTime] (all events are displayed in green). But because Kara’s anomalous times are similar to those of her peers, nothing in her badge data significantly impacts her overall risk score in Constellation.

Kara’s employer uses a badge logging system that includes not just access times but also unsuccessful access attempts (aka rejections). With this additional information, we find that Kara has significantly more access rejection events, [BadgeError] and [UnusualBadgeErrorTime], than her peers, which suggests that she may be attempting to access areas she is not authorized to enter. Because there are other perfectly reasonable explanations for this behavior, we apply these anomalies as weak evidence to the [AccessesFacilityUnauthorized] model node (all nodes are displayed in red). And Constellation imposes a decay half-life of 14 days on these anomalous events, meaning that after two weeks their effect will be reduced by half.
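The comparison of Kara’s rejection events with her peers’ can be sketched as a z-score against the peer group. This is one common, simple approach, used here purely for illustration. The counts and the cutoff of 3 standard deviations are hypothetical, and the text does not say how Constellation’s peer-group analysis is computed.

```python
import math
from statistics import mean, pstdev

def peer_zscore(individual: float, peers: list) -> float:
    """How many standard deviations `individual` sits above (or below) the peer mean."""
    mu = mean(peers)
    sigma = pstdev(peers)
    if sigma == 0:
        # All peers identical: any difference at all is maximally unusual.
        return 0.0 if individual == mu else math.copysign(math.inf, individual - mu)
    return (individual - mu) / sigma

def exceeds_peers(individual: float, peers: list, cutoff: float = 3.0) -> bool:
    return peer_zscore(individual, peers) > cutoff


# Hypothetical monthly counts of [BadgeError] events for five peers, and for Kara.
peer_counts = [2, 3, 4, 3, 3]
print(round(peer_zscore(9, peer_counts), 2))  # 9.49
print(exceeds_peers(9, peer_counts))          # True
```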

Now let’s say that the employer’s badge system also logs the reason for the access rejection. For example, a pattern of lost or expired badges [ExcessiveBadgeErrorLostOrExpired] could imply that Kara is careless. Because losing or failing to renew a badge is a more serious indicator, even if there are other explanations, we would apply this as medium-strength evidence to the model node [CarelessTowardDuties] with a decay half-life of 14 days. A series of lost badge events could also be applied as negative evidence to the [Conscientious] model node. If the error type indicates insufficient clearance for entering the area in question, we can infer that Kara is attempting access above her authorized level [BadgeErrorInsuffClearance].

A consistent pattern of insufficient clearance errors [Excessive/UnusualBadgeErrorInsuffClearance] would be applied as strong evidence to the node [AccessesFacilityUnauthorized] with a longer decay half-life of 30 days to reflect the increased seriousness of this type of error (see image below). If the error indicates an infraction of security rules, we can infer that Kara is disregarding her employer’s security regulations, and a pattern of this behavior would be applied as strong evidence to the model node [NeglectsSecurityRules] with a decay half-life of 60 days.
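The event-to-node mappings described so far can be captured as a small lookup table. The sketch below encodes only the mappings for which the text states the node, the strength and the half-life. The [Conscientious] and [NeglectsSecurityRules] mappings are omitted because their event names or half-lives aren’t given. The text gives strengths only as ‘weak,’ ‘medium’ and ‘strong,’ so the numeric weights are hypothetical placeholders.

```python
from dataclasses import dataclass

# Hypothetical numeric stand-ins for the qualitative strengths in the text.
STRENGTH_WEIGHT = {"weak": 1.0, "medium": 2.0, "strong": 4.0}

@dataclass(frozen=True)
class EvidenceRule:
    node: str            # model node the evidence is applied to
    strength: str        # "weak", "medium" or "strong"
    half_life_days: int  # relevance half-life for temporal decay

RULES = {
    "BadgeError": EvidenceRule("AccessesFacilityUnauthorized", "weak", 14),
    "UnusualBadgeErrorTime": EvidenceRule("AccessesFacilityUnauthorized", "weak", 14),
    "ExcessiveBadgeErrorLostOrExpired": EvidenceRule("CarelessTowardDuties", "medium", 14),
    "Excessive/UnusualBadgeErrorInsuffClearance": EvidenceRule(
        "AccessesFacilityUnauthorized", "strong", 30),
}

def current_weight(event_type: str, age_days: float) -> float:
    """Placeholder strength, decayed exponentially by the rule's half-life."""
    rule = RULES[event_type]
    return STRENGTH_WEIGHT[rule.strength] * 0.5 ** (age_days / rule.half_life_days)

def events_for_node(node: str) -> list:
    """Event types that feed a given model node, in alphabetical order."""
    return sorted(e for e, r in RULES.items() if r.node == node)


# After 30 days, strong clearance evidence keeps half its weight,
# while a weak badge error has decayed to under a quarter of its own.
print(current_weight("Excessive/UnusualBadgeErrorInsuffClearance", 30))  # 2.0
print(round(current_weight("BadgeError", 30), 3))                       # 0.226
```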

Finally, let’s say Kara’s employer makes the ‘Door Name’ field available to Constellation. This not only enables us to detect location anomalies ([UnusualAccessAuthorizedLocation] and [UnusualBadgeErrorLocation]) in addition to time anomalies, but also allows the Constellation model to infer something about the area being accessed. For example, door names that include keywords like ‘Security’, ‘Investigations’ or ‘Restricted’ are categorized as sensitive areas. Those with keywords like ‘Lobby’, ‘Elevator’ or ‘Garage’ are classified as common areas. Recreational areas are indicated by names such as ‘Break Room’, ‘Gym’ and ‘Cafeteria’.
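The keyword categorization can be sketched as follows. The keywords come from the paragraph above. The matching rules are assumptions: matching is a case-insensitive substring check, and the first matching category wins. Sensitive keywords are checked first so that a door named, say, ‘Security Lobby’ is treated conservatively. `classify_door` is a hypothetical name.

```python
# Keywords taken from the text; category order matters (first match wins).
AREA_KEYWORDS = {
    "sensitive": ("security", "investigations", "restricted"),
    "common": ("lobby", "elevator", "garage"),
    "recreational": ("break room", "gym", "cafeteria"),
}

def classify_door(door_name: str) -> str:
    """Map a raw 'Door Name' value to an area category, or 'other' if nothing matches."""
    name = door_name.lower()
    for category, keywords in AREA_KEYWORDS.items():
        if any(keyword in name for keyword in keywords):
            return category
    return "other"


print(classify_door("Bldg 2 Restricted Lab"))  # sensitive
print(classify_door("Security Lobby"))          # sensitive
print(classify_door("3rd Floor Break Room"))    # recreational
```

Plain substring matching can misfire on door names that happen to contain a keyword inside another word. A production version would more likely match whole words against a maintained list of door names.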

This additional information gives us finer granularity in generating badge events. An anomalous event from a common area [UnusualCommonAreaAccessAuthorizedTime/Location] is much less significant than one from a sensitive area [UnusualSensitiveAreaAccessAuthorizedTime/Location], which we would apply to the model node [AccessesFacilityUnauthorized] as strong evidence with a decay half-life of 60 days. Combining this information with the error type gives us greater accuracy, and therefore stronger evidence; a pattern of clearance errors when Kara attempts to gain access to a sensitive area [UnusualBadgeErrorInsuffClearanceSensitiveAreaTime] is of much greater concern than a time anomaly for a common area [UnusualAccessAuthorizedCommonAreaTime]. If the data field for number of attempts is available, we can infer even stronger evidence: if Kara has tried to enter a sensitive area for which she has an insufficient clearance five times within one minute, we clearly have a problem.
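Detecting ‘five times within one minute’ is a sliding-window count. The sketch assumes the input has already been filtered to one person’s insufficient-clearance rejections at a single sensitive door. `has_burst` and its default parameters are hypothetical.

```python
from collections import deque
from datetime import datetime, timedelta

def has_burst(attempts: list, max_attempts: int = 5,
              window: timedelta = timedelta(minutes=1)) -> bool:
    """True if `max_attempts` or more attempts fall within any span of length `window`."""
    recent = deque()
    for ts in sorted(attempts):
        recent.append(ts)
        # Drop attempts more than `window` older than the newest one.
        while ts - recent[0] > window:
            recent.popleft()
        if len(recent) >= max_attempts:
            return True
    return False


base = datetime(2018, 10, 8, 22, 0, 0)
rapid = [base + timedelta(seconds=s) for s in (0, 10, 20, 30, 40)]
spread = [base + timedelta(minutes=m) for m in range(5)]
print(has_burst(rapid))   # True
print(has_burst(spread))  # False
```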

There are even deeper insights to be gleaned from badge data. For example:

- We could infer that Kara is [Disgruntled] if she is spending more time in recreational areas than her peers.

- Similarly, if Kara is spending less time in recreational areas than her peers, we could infer that she is [UnderWorkStress].

- In some facilities, accessing the roof might even indicate a risk of self-harm.
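Time spent in recreational areas has to be estimated from badge events, since badge systems typically record entries rather than how long someone stays. The sketch makes two simplifying assumptions. First, a person is assumed to remain in an area until their next badge event. Second, each event is assumed to be tagged already with an area category, for example by keyword matching on door names. The resulting share would then be compared against the share for the peer group.

```python
from datetime import datetime

def recreational_share(events: list) -> float:
    """Approximate fraction of badge-observed time spent in recreational areas.

    `events` holds (timestamp, area_category) pairs for one person on one day.
    Assumes the person stays in an area until their next badge event, so the
    last event of the day contributes no time.
    """
    events = sorted(events)
    total = recreational = 0.0
    for (start, area), (end, _) in zip(events, events[1:]):
        seconds = (end - start).total_seconds()
        total += seconds
        if area == "recreational":
            recreational += seconds
    return recreational / total if total else 0.0


day = [
    (datetime(2018, 10, 9, 9, 0), "common"),
    (datetime(2018, 10, 9, 10, 0), "recreational"),
    (datetime(2018, 10, 9, 10, 30), "sensitive"),
    (datetime(2018, 10, 9, 12, 0), "common"),
]
print(round(recreational_share(day), 3))  # 0.167
```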

Finally, consider a scenario in which an individual has several unusual events that seem innocuous on their own but together indicate a concerning behavior. If within a short timeframe Kara accesses a new building [UnusualBadgeAccessLocation] at an unusual time [UnusualBadgeAccessTime] and prints a large number of pages [UnusualPrintVolume] from a printer she has never used before [UnusualPrintLocation], a purely badge-focused or network-focused monitoring system will generate a succession of isolated alerts, each lost in a sea of other alerts. Such a system may miss the larger and more troubling picture that could have been gleaned by ‘connecting the dots.’

The Constellation model, by contrast, is designed to give events more importance when combined with other events and detected sequences of events. This combination of events would significantly impact Kara’s score (see image below), and an insider threat analyst would see the score change displayed automatically as an incident in Constellation and be able to conduct a deeper investigation.
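Constellation weighs combinations of events through its probabilistic model. A much cruder way to express the same ‘connect the dots’ idea is a rule that fires when all four of Kara’s unusual events occur within one time window. The rule itself and the four-hour window are assumptions made for illustration; this is not how Constellation works.

```python
from datetime import datetime, timedelta

PATTERN = frozenset({
    "UnusualBadgeAccessLocation",
    "UnusualBadgeAccessTime",
    "UnusualPrintVolume",
    "UnusualPrintLocation",
})

def pattern_in_window(events: list, pattern: frozenset = PATTERN,
                      window: timedelta = timedelta(hours=4)) -> bool:
    """True if every event type in `pattern` occurs within some `window`-long span.

    `events` holds (timestamp, event_type) pairs from any source (badge, printer, ...).
    """
    events = sorted(events)
    for i, (start, _) in enumerate(events):
        seen = set()
        for ts, event_type in events[i:]:
            if ts - start > window:
                break
            seen.add(event_type)
        if pattern <= seen:
            return True
    return False


t = datetime(2018, 10, 9, 22, 0)
events = [
    (t, "UnusualBadgeAccessLocation"),
    (t, "UnusualBadgeAccessTime"),
    (t + timedelta(minutes=20), "UnusualPrintLocation"),
    (t + timedelta(minutes=25), "UnusualPrintVolume"),
]
later = events[:3] + [(t + timedelta(days=2), "UnusualPrintVolume")]
print(pattern_in_window(events))  # True
print(pattern_in_window(later))   # False
```

A fixed rule like this is all-or-nothing. A probabilistic model can instead let each additional correlated event raise the risk score gradually.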

Decades of research studies and experience gained from real-world insider threat events have strongly demonstrated that malicious, negligent and inadvertent insiders alike exhibit adverse attitudes and behaviors, sometimes months or even years before the actual event.

Badge data, like network data, won’t tell the whole story on its own. But it can deliver critical insights not available anywhere else. And when its component pieces are analyzed and blended with data from other sources — for example evidence of professional, personal or financial stress — the result is contextualized, actionable insider-threat intelligence. It’s a user behavior analytics approach that focuses on the user, not the network or the device.

# # #

Julie Ard is the Director of Insider Threat Operations at Haystax Technology, a Fishtech Group company.

NOTE: For more information on Constellation’s ‘whole-person’ approach to user behavior analytics, download our in-depth report, To Catch an IP Thief.

More than Awareness: What We've Learned

Today is the first day of National Cybersecurity Awareness Month, an appropriate occasion to review some of the most important lessons that industry organizations should have learned as they strive to maintain a holistic cyber-risk mitigation program that their leadership can trust. Based on more than two decades of shaping the landscape of cybersecurity solutions, here are a few of the consensus insights we have gleaned.

Cybersecurity requires more than a nod of approval from the C-suite.

A bottom-up approach to cybersecurity, often originating in the IT security shop, is common. But securing C-suite buy-in after the fact can be a struggle. In today’s environment where a robust cybersecurity risk management program is essential to the ongoing viability of an organization, the drivers must be at the top. Moreover, cybersecurity must be a consensus priority for all elements of leadership, starting with the CEO but vitally including the CIO, CISO, CTO, CFO and the top legal and risk-management leaders in the organization. And because a holistic approach involves people and process as well (see below for more on that), HR must be on board. Since a cyber attack can impact the organization’s systems, finances, people, facilities and even reputation, it really is a matter of all C-suite hands on deck.

Cyber-hygiene is not enough.

Most cybersecurity efforts have focused on cyber ‘hygiene’ through compliance with a set of recommended but unenforceable standards. Rather than checking boxes, however, what’s really needed is a holistic risk framework that is much more analytically sound and scientifically grounded — in other words, a solid commitment to risk-based assessments and responses — in order to accurately understand and prevent the most serious cybersecurity threats. Security teams should ask important questions like “which threats are most likely to occur?” and “what are our greatest vulnerabilities?” Translating these into business terms is key, and measuring them so that risks and countermeasures can be prioritized is essential.

Network data is not enough.

Many organizations are still trying to detect their biggest external and internal threats based mainly on network logging and aggregation. But the fact is that the earliest indicators of such threats lie in human actions and attitudes. Thus, security teams that are proactive and focused on data-driven cybersecurity need to find ways of bringing in more unstructured data from unconventional sources that will reveal behaviors well in advance of an actual event. After all, you can’t discipline or fire an end-point.

Technology alone is not the solution.

To hear many security vendors tell it, they’ve already developed the perfect all-encompassing cybersecurity solution. The reality, however, is that a truly holistic cyber-risk management program requires a well-thought-out and coordinated set of protocols and routines that encompass people, process and technology. No tool on its own will protect an organization if the server room door is left unlocked, or if staff members aren’t vigilant about not clicking on suspicious emails. Continuous training and education, along with easily understood policies implemented from the top down with no exceptions, are just as critical as the best firewall or SIEM tool.

How to Get the Data You Need

Enterprise security teams responsible for preventing insider threats have mixed feelings about acquiring and analyzing internal data. Sure, that data contains a wealth of knowledge about the potential for risk from trusted employees, contractors, vendors and customers. But it also comes with a mountain of legal and organizational headaches, can be contradictory and often generates more questions than answers. No wonder most security programs prefer to rely on monitoring network logs.

But there’s a more methodical way for organizations to approach data acquisition and analysis. Before diving into the arduous task of trying to work with the data they have, it’s better to first ask what problems they want to solve, and then let the answers guide them down the path of obtaining the data they need.

One effective mechanism for carrying out this sequence is to build a model of the problem domain and then go find relevant data to apply to it. At Haystax, we collaborate with diverse subject-matter experts to build probabilistic models known as Bayesian inference networks, which excel at assessing probabilities in complex problem domains where the data is incomplete or even contradictory.

Our user behavior analytics (UBA) model, for example, was developed to detect individuals who show an inclination to commit or abet a variety of malicious insider acts, including: leaving a firm or agency with stolen files or selling the information illegally; committing fraud; sabotaging an organization’s reputation, IT systems or facilities; and committing acts of workplace violence or self-harm. It also can identify indicators of willful negligence (rule flouting, careless attitudes to security, etc.) and unwitting or accidental behavior (human error, fatigue, substance abuse, etc.) that could jeopardize an organization’s security.

The UBA model starts with a top-level hypothesis that an individual is trustworthy, followed by high-level concepts relating to personal trustworthiness such as reliability, stability, credibility and conscientiousness. It then breaks these concepts down into smaller and smaller sub-concepts until they become causal indicators that are measurable in data. Finally, it captures not only the relationships between concepts, but also the relative strength of each relationship.

Sitting at the core of Haystax’s Constellation Analytics Platform, this UBA model provides the structure our customers need to: 1) pinpoint which of their data sets can be most usefully applied to the model; 2) identify any critical data gaps they may have; and 3) ignore data that’s unlikely to be useful. Most importantly, it enables security teams to assess workplace risk in a holistic and predictive way as the individual’s adverse behaviors are starting to manifest themselves — rather than after a major adverse event has taken place.

Data relevant to insider threat mitigation can be categorized as financial, professional, legal and personal. Within these categories, data falls into two main types:

- Static data is typically used for identifying major life events, and can establish a baseline for what ‘normal’ behavior looks like for that individual. This type of data isn’t updated frequently, so there may be longer periods of time with no new information.

- Dynamic data is updated on the order of hours or days and is the source of detection for smaller, less obvious life and behavioral changes in an individual. For example, there may be a record of marriage (large life event; static data) and a recent vacation for two (smaller life event; dynamic data) indicating a healthy and stable home life.

Ideally, organizations have some of each data type to establish baselines and then maintain day-to-day situational awareness.

Another important part of the data identification and acquisition process is accessibility. There are three levels of data accessibility to consider:

- Public data is readily available from open external sources.

- Organizational data is managed internally by a company or government agency and can be obtained if a compelling case is made.

- Protected/private data is mostly controlled by individuals or third-party entities and is difficult to access without their consent.

The table below contains a detailed list of data sources broken down by category and accessibility level, and by whether each source is static or dynamic.

UBA industry analysts at Gartner have observed that incorporating unstructured information like performance appraisals, travel records and social media posts “can be extremely useful in helping discover and score risky user behavior,” because it provides far better context than structured data from networks and the like. (And with more and more network data being encrypted, pulling threat signals from network logs is in any case becoming increasingly challenging.)

There are dozens of behavioral indicators for which supporting data is available or obtainable, and which can be readily ingested, augmented, applied and analyzed within the Constellation UBA solution. Take the case of a senior-level insider who intends to steal a large volume of his company’s intellectual property (IP). An early risk indicator is that he comes into the office at an odd time (badge records), accesses a file directory he is normally not privy to (network data) and prints out a large document (printer logs). This activity alone would not trigger an alert in Constellation, as it could be that he was assigned a new project with a tight deadline by a different department.

But then data is obtained which reveals that he is experiencing financial or personal stress (public bankruptcy/divorce records), leading to degraded work performance (poor supervisor reviews) and several tense confrontations with colleagues (staff complaints), all of which will elevate him in Constellation to a moderate risk. Finally, he is caught posting a derisive rant about the company on social media (public data) and either contacting a competitor (email/phone logs) or booking a one-way ticket to another country (travel records). This activity elevates him to high-risk status in Constellation and he is put on a watch list, so that when rumors spread of pending departmental layoffs (HR plans) and the company detects him downloading large files to a thumb drive (DLP alert), the company’s security team is ready to act.

October is National Cybersecurity Awareness Month in the US. In the months leading up to it, technology and security experts have increasingly come to the consensus view that while insider threats constitute one of the fastest-growing risks to the IT and physical security environments, organizations don’t have the analytical tools (or the data) to pinpoint their biggest threats in a timely way.

The reality is that most organizations today still try to detect their insider threats by analyzing log aggregation files, and not much else. Because they invariably end up with an excessive number of false positives and redundant alerts, their analysts often feel overwhelmed trying to triage their cases and waste precious time chasing down contextual information to verify what’s real and what is not.

By contrast, Haystax’s approach with its Constellation UBA solution is to apply a much larger volume and variety of data to a probabilistic behavioral risk model, which then continuously updates its ‘belief’ that each employee is trustworthy (or not). With Constellation — and a broader array of data sources — a security team can perform true cyber-risk management, avoiding alert overload and focusing instead on quickly and proactively identifying those individuals who are poised to do the most harm to the enterprise.

Hannah Hein is Insider Threat Project Manager at Haystax Technology.

How to Mitigate Insider Threat

The best insider threat mitigation programs often use combinations of analytic techniques to assess and prioritize workforce risk, according to a recent report by the Intelligence and National Security Alliance (INSA). For example, probabilistic models can be usefully enhanced with rules-based triggers and machine learning algorithms that detect anomalies, creating a powerful user behavior analytics (UBA) capability for government and private enterprises alike.

Haystax Technology’s Vice President for UBA Customer Success, Tom Read, was a key member of INSA’s Insider Threat Subcommittee, which produced the report, and he recently summarized its findings in an article for Homeland Security Today.

“Organizations confronting malicious, negligent and unintentional threats from their trusted insiders must make important policy, structural and procedural decisions as they stand up programs to mitigate these burgeoning threats,” Read noted. “On top of that, they must choose from a bewildering array of insider threat detection and prevention solutions.”

INSA’s report, An Assessment of Data Analytics Techniques for Insider Threat Programs, provides a framework to help government and industry decision-makers evaluate the merits of different analytic techniques. Six primary techniques are identified, Read says, along with detailed explanations of each and guidance on how insider threat program managers can determine the types of tools that would most benefit their organizations. The techniques are: rules-based engines; correlation and regression statistics; Bayesian inference networks; machine learning (supervised); machine learning (unsupervised); and cognitive and deep learning.

Read summarized the report’s assessment of each technique in greater detail, as well as its four primary conclusions — that insider threat program managers should:

- Integrate data analytics into the risk management methodology they use to rationalize decision-making;

- Assess which techniques are likely to be most effective given the available data, their organizational culture and their levels of risk tolerance;

- Evaluate the myriad software tools available to determine which ones most effectively analyze data using the preferred approach; and

- Assess the human and financial resources needed to launch a data analytics program.

Click here to read the full Homeland Security Today article.

Fishtech Group Completes Acquisition of Haystax

Cybersecurity firm’s capabilities include UBA and Public Safety and Security

Kansas City, MO (July 13, 2018) — Fishtech Group (FTG) has completed its acquisition of Haystax (previously Haystax Technology), an advanced security analytics and risk management solutions provider, as a wholly owned entity under the FTG umbrella. The acquisition extends the cybersecurity firm’s capabilities in new markets including User Behavior Analytics (UBA) and Public Safety and Security. Fishtech is investing heavily in taking the new venture to market in the commercial enterprise space.

Announced in May, the acquisition solidifies the cybersecurity solutions firm’s relationship with Haystax, which had been a Fishtech Venture Group partner since 2016.

The new entity furthers Fishtech’s mission of data-driven security solutions while extending Haystax’s customer reach beyond its roots in homeland security and public safety. Gary Fish, CEO and Founder of Fishtech, serves as CEO of Haystax, and Pete Shah will be Chief Operations Officer. Haystax will retain its base in McLean, Virginia.

“This is a 1+1=3 scenario in that our combined capabilities are so far beyond what either entity could accomplish alone,” said Fish. “We’re expanding the sales force and the brand message to a national scale. We’re already gaining traction into enterprise accounts nationally.”

Haystax’s flexible Constellation analytics platform delivers a wide array of advanced security analytics and risk management solutions that enable rapid understanding of, and response to, virtually any type of cyber or physical threat. Based on a patented model-driven approach that applies multiple artificial intelligence techniques, it reasons like a team of expert analysts to detect complex threats and prioritize risks in real time and at scale, for more effective protection of critical systems, data, facilities and people.

“The Constellation platform has proven itself to be a versatile and effective platform for insider threat, security operation center (SOC) automation, and public safety,” said Fish. “We look forward to working closely with the Haystax team to further enhance those capabilities and develop other applications for its use.”

About Haystax

Founded in 2012, Haystax is a leading security analytics platform provider based in McLean, Virginia.

About Fishtech Group

Fishtech delivers operational efficiencies and improved security posture for its clients through cloud-focused, data-driven solutions. Fishtech is based in Kansas City, Missouri. Visit https://fishtech.group/ or contact us at info@fishtech.group.

SOAR Above Threats to your Organization

If you’ve ever questioned whether your SOC team is missing things or your MSSP is keeping up, you’re not alone. That’s why SOAR (Security Orchestration, Automation and Response) is gaining popularity fast.

It’s an easy way to do more with less.

“Our experts deliver a force multiplier for your overworked analyst teams,” says Fishtech CISO Eric Foster.

McLean cyber company whose tech is used by the NFL is being acquired

By Robert J. Terry – Senior Staff Reporter, Washington Business Journal

Key story highlights:

- Fishtech Group is buying McLean-based Haystax Technology and will take the company to market in the commercial enterprise space.

- “People spend a lot of time and money securing their data but they’re not using their data to drive security,” said CEO Gary Fish.

- Haystax’s security analytics platform has been used by the U.S. Department of Defense and the National Football League.

A Kansas City holding company’s deal to acquire McLean-based Haystax Technology is a bid to bring advanced analytics and automation to commercial and government cybersecurity markets.

Fishtech Group plans to make Haystax a wholly owned subsidiary and invest heavily in taking the new venture to market in the commercial enterprise space, particularly heavily regulated industries such as finance and health care. It’s also planning to enhance Haystax’s presence in federal, state and local government markets beyond its roots in homeland security and public safety.

Haystax’s security analytics platform has been used by the U.S. Department of Defense to help track terrorists. The National Football League used the platform to monitor suspicious activity and prevent cyber and physical threats at the Super Bowl. And the state of California used the technology to crunch data from earthquake sensors to predict when earthquakes might occur.

Fishtech CEO Gary Fish said the Haystax mission fits well with his company’s push to use big data, sophisticated analytics, artificial intelligence and machine learning to disrupt the security integration market, where the big vendors are focused on-premises and in networks with well-defined perimeters. In an age of cloud computing and thousands of mobile endpoints, there’s no longer really a perimeter for an organization’s data, but there are reams of insights in that data to enhance an overall security posture.

“People spend a lot of time and money securing their data but they’re not using their data to drive security. If you look in the data you can find what’s causing your security issues potentially,” Fish told me Wednesday. “Haystax brings in large amounts of log data and system data and personnel data and by letting algorithms, the machine learning, the artificial intelligence, by letting the machines sift through this they can see much more than a human could possibly find in the data.”

The two companies know each other well. Fishtech invested $4 million in Haystax in December 2016. When it came time to consider additional investment, Fish decided the better course was to acquire the company, which has about $10 million in annual revenue. He wouldn’t disclose the transaction’s terms but said he expects Haystax to grow 30 percent to 40 percent annually and continue to add to its 50-employee headcount.

Fish will be CEO of Haystax while Pete Shah, the former chief revenue officer, will become COO.

Haystax was launched in 2013 when private equity firm Edgewater Funds acquired two Northern Virginia companies, Digital Sandbox Inc. and FlexPoint Technology LLC, and set out creating a cyber platform for defense, intelligence agency and commercial clients. Edgewater had linked up the year before with Bill Van Vleet, a cyber exec with ties both here and in California, to build a next-generation intelligence company. Van Vleet was previously CEO of Applied Signal Technology Inc., which he sold to Raytheon for approximately $500 million.

Fish said Edgewater will stay on as a minority owner.

Last month, two local cyber startups unveiled Series B venture funding rounds of $20 million or more on successive days, underscoring the market opportunity at a time of increasingly complex threats from well-organized nefarious actors, often state-backed. Upstarts maintain that the established players can’t address cyber threats well or don’t measure up to what customers want. But the flood of investment has also, critics say, ushered in a lot of noise in the market, courtesy of companies that are long on marketing but short on technology that does what it says it does.

Who Gets In | Microsoft Conditional Access

The question of controlling access to an organization’s data and systems comes up often in our conversations with customers. Recently we had another customer ask how to ensure that devices being used to access their cloud environment met their security requirements.

While there are several ways to address that issue, one technical solution we dug into was Microsoft’s Conditional Access, which is part of Azure AD and Intune.

What follows are some of the key findings we uncovered while configuring Microsoft Conditional Access via Intune.

Licensing Model(s)

While there are several ways to gain access to Conditional Access functionality within the Microsoft cloud platform, we decided to go down the Intune route.

This is because we wanted to be able to apply access policies based on different aspects of the device, which in the Microsoft world means Intune is needed.

In addition, Intune provides ways to manage other aspects of both mobile and laptop/desktop devices, which is a bonus.

To get the functionality of Intune and Conditional Access, you will need to look at Microsoft’s Enterprise Mobility + Security (EMS) offerings. The licensing models break down like this:

- EMS E3
  - Intune
  - Azure AD Premium P1
  - Azure Information Protection P1
  - Advanced Threat Analytics

- EMS E5
  - Intune
  - Azure AD Premium P2
  - Azure Information Protection P2
  - Advanced Threat Analytics
  - Cloud App Security
  - Azure Advanced Threat Protection

For the purposes of this article, the minimum level needed is EMS E3, which includes Conditional Access functionality. Not to be confusing (yeah right), but getting ‘Risk-based Conditional Access’ requires the top-level EMS E5 subscription.

The primary difference between Conditional Access and Risk-based Conditional Access is that Conditional Access allows policies to be built based on User/Location, Device, and Application criteria while Risk-based Conditional Access adds Microsoft’s Intelligent Security Graph and machine learning to create a more dynamic risk profile to control access.
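The licensing logic above boils down to a lookup: what is the lowest EMS tier that contains the components a given feature needs? The sketch below encodes the two bundles listed in this article as Python sets. The `FEATURE_REQUIREMENTS` table, its feature labels and the `minimum_plan` helper are hypothetical illustrations rather than any Microsoft tool, and real licensing has many other paths, so treat this as a sketch of the reasoning only.

```python
from typing import Dict, List, Optional, Set

# Component lists copied from the EMS E3/E5 breakdown in this article.
# Bundle contents change over time; confirm with Microsoft before relying on them.
EMS_PLANS: Dict[str, Set[str]] = {
    "EMS E3": {
        "Intune",
        "Azure AD Premium P1",
        "Azure Information Protection P1",
        "Advanced Threat Analytics",
    },
    "EMS E5": {
        "Intune",
        "Azure AD Premium P2",
        "Azure Information Protection P2",
        "Advanced Threat Analytics",
        "Cloud App Security",
        "Azure Advanced Threat Protection",
    },
}

# Hypothetical requirements: each feature lists alternative component sets,
# any one of which is sufficient.
FEATURE_REQUIREMENTS: Dict[str, List[Set[str]]] = {
    # Device-based policies need Intune plus Azure AD Premium (P1 or P2).
    "Conditional Access (device-based)": [
        {"Intune", "Azure AD Premium P1"},
        {"Intune", "Azure AD Premium P2"},
    ],
    # Risk-based Conditional Access needs Azure AD Premium P2.
    "Risk-based Conditional Access": [{"Azure AD Premium P2"}],
}

PLAN_ORDER = ["EMS E3", "EMS E5"]  # lowest tier first


def minimum_plan(feature: str) -> Optional[str]:
    """Return the lowest EMS tier that contains a qualifying component set."""
    for plan in PLAN_ORDER:
        # `req <= components` is a subset test: all required parts are bundled.
        if any(req <= EMS_PLANS[plan] for req in FEATURE_REQUIREMENTS[feature]):
            return plan
    return None


print(minimum_plan("Conditional Access (device-based)"))  # EMS E3
print(minimum_plan("Risk-based Conditional Access"))      # EMS E5
```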

A final point about licensing: it is complex. Get your Microsoft contact involved to help work out the licensing details for your specific situation, because there are many paths to the same destination.

Setting up Conditional Access

In order to configure a Conditional Access policy, there are a few prerequisites that need to be configured. The specifics of configuring those prerequisites are beyond the scope of this article, but the broad strokes are that you have properly set up and configured your Azure account, Azure AD, and Intune.

This includes ensuring you have some Azure AD users and groups defined that policies can be applied to. Again, this is an oversimplification, so my apologies if I left major pieces out.

Now we move into actually creating a Conditional Access policy. Fair warning, I will not cover every possible scenario. Instead, I will try to convey the major concepts and features of a Conditional Access policy as well as any gotchas I came across.

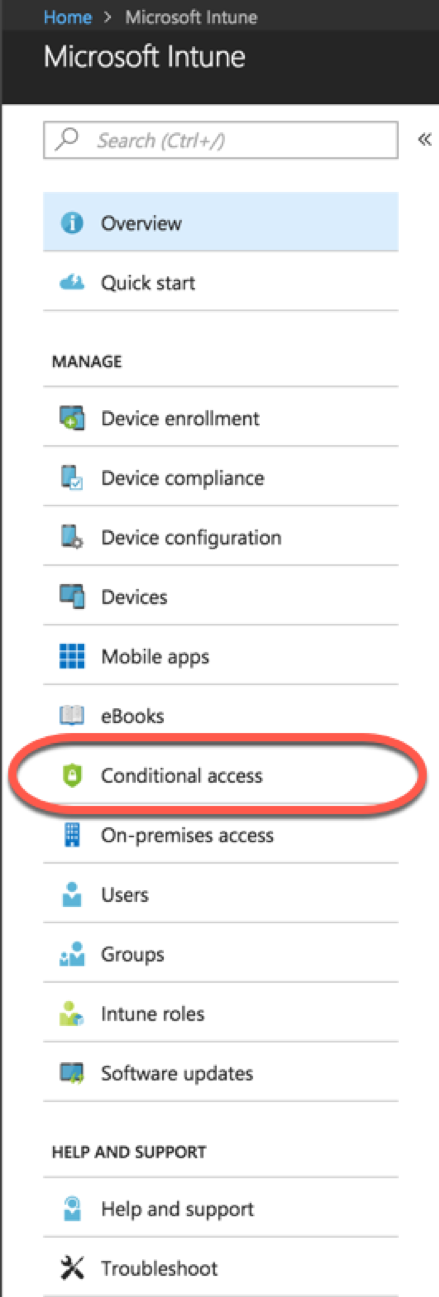

Open up your Azure portal and navigate to Intune > Conditional Access.

Note: You can also find Conditional Access by navigating to Azure Active Directory > Conditional Access. In fact, this may be the better route to go, because this path exposes additional options that you won’t find by navigating there via Intune.

For example, in the Azure AD Conditional Access portal you can create custom controls using JSON. You can also define named locations, VPN connectivity, and terms of service.
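Named locations are essentially labeled IP ranges that a policy’s Locations condition can test a sign-in against. As a rough illustration of that matching step (not Azure AD’s actual implementation), the sketch below uses Python’s standard `ipaddress` module. The location names and ranges are made up; the ranges are RFC 5737 documentation blocks standing in for an organization’s real egress addresses.

```python
import ipaddress
from typing import Dict, List, Optional

# Hypothetical named locations; replace with your organization's real ranges.
NAMED_LOCATIONS: Dict[str, List[str]] = {
    "HQ office": ["203.0.113.0/24"],
    "Branch office": ["198.51.100.0/25"],
}


def match_named_location(ip: str) -> Optional[str]:
    """Return the first named location whose CIDR ranges contain `ip`."""
    addr = ipaddress.ip_address(ip)
    for name, cidrs in NAMED_LOCATIONS.items():
        # Membership is simply False (not an error) when IPv4/IPv6 versions differ.
        if any(addr in ipaddress.ip_network(cidr) for cidr in cidrs):
            return name
    return None


print(match_named_location("203.0.113.42"))    # HQ office
print(match_named_location("198.51.100.200"))  # None (outside the /25)
```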

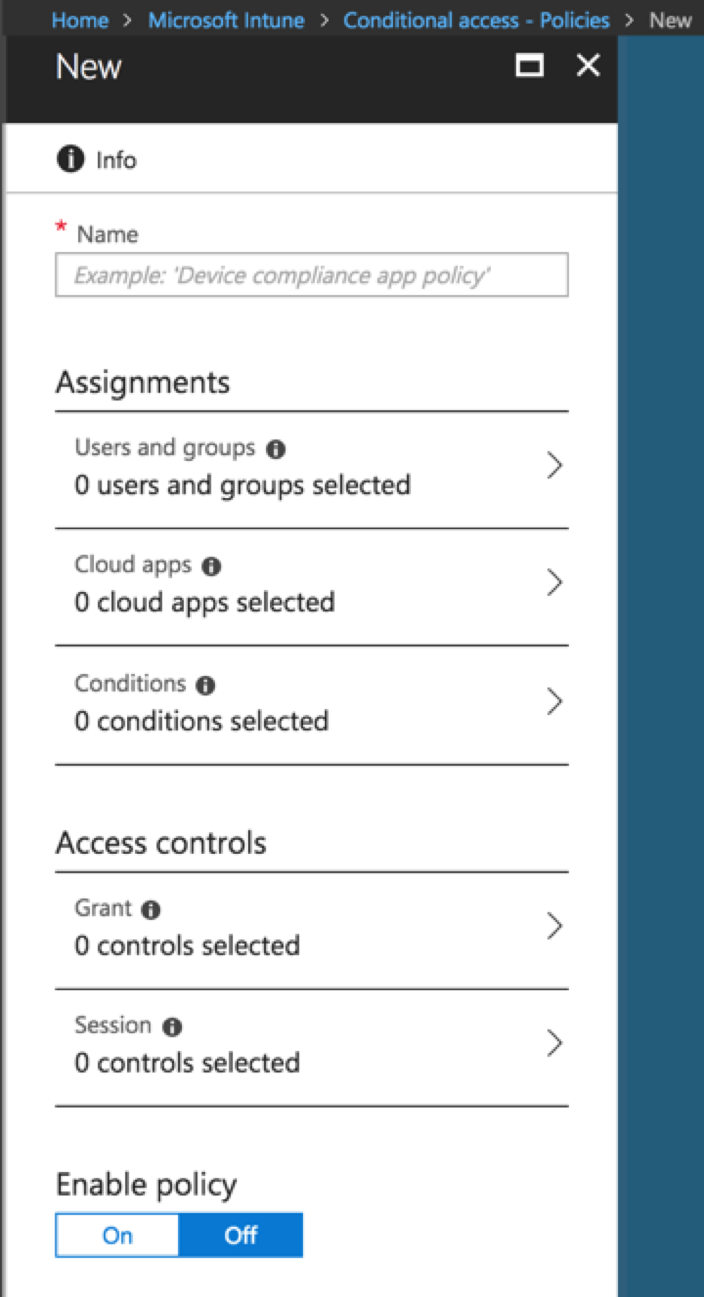

In the right-hand pane, click + New Policy and a new policy window will open.

The two main sections of a Conditional Access policy are ‘Assignments’ and ‘Access Controls’.

Assignments:

- Users and Groups
  - Select the users and/or groups the policy will be applied to.

- Cloud Apps
  - Select the cloud apps to be covered by the policy. O365 apps are available by default. Others can be added as needed (beyond the scope of this article).

- Conditions
  - The conditions under which the policy will apply.
  - The four condition areas are:
    - Sign-in risk (requires the EMS E5 plan, I believe)
    - Device platforms (iOS, Windows, Android, etc.)
    - Locations (requires configuring locations in the Azure portal)
    - Client apps (browser or client app)
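One way to read the Assignments section is as a filter that decides whether a policy applies to a given sign-in at all; the access controls only come into play when it does. The sketch below is a simplified model under that assumption: every configured condition must match, and unconfigured conditions are ignored. The `SignIn` and `Assignments` classes and the `policy_applies` function are hypothetical, and the model leaves out exclude lists and other details of the real service.

```python
from dataclasses import dataclass
from typing import Optional, Set


@dataclass
class SignIn:
    """Hypothetical view of one sign-in attempt."""
    user_groups: Set[str]
    cloud_app: str
    platform: str            # e.g. "iOS", "Windows", "Android"
    location: Optional[str]  # named location matched, or None
    client_app: str          # "browser" or "client app"
    sign_in_risk: str = "none"


@dataclass
class Assignments:
    """Hypothetical policy assignments; None means the condition is not configured."""
    groups: Set[str]
    cloud_apps: Set[str]
    platforms: Optional[Set[str]] = None
    locations: Optional[Set[str]] = None
    client_apps: Optional[Set[str]] = None
    sign_in_risks: Optional[Set[str]] = None


def policy_applies(a: Assignments, s: SignIn) -> bool:
    # The user must be in a targeted group and sign in to a targeted app.
    if not (a.groups & s.user_groups) or s.cloud_app not in a.cloud_apps:
        return False
    conditions = [
        (a.platforms, s.platform),
        (a.locations, s.location),
        (a.client_apps, s.client_app),
        (a.sign_in_risks, s.sign_in_risk),
    ]
    # Every configured condition must match; unconfigured ones are skipped.
    return all(allowed is None or value in allowed for allowed, value in conditions)


policy = Assignments(groups={"Finance"}, cloud_apps={"Office 365"},
                     platforms={"iOS", "Android"})
phone = SignIn({"Finance"}, "Office 365", "iOS", None, "client app")
laptop = SignIn({"Finance"}, "Office 365", "Windows", None, "browser")
print(policy_applies(policy, phone))   # True
print(policy_applies(policy, laptop))  # False
```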

Access Controls:

- Grant (block or grant access based on additional criteria)
  - Require multi-factor authentication
  - Require device to be marked as compliant (more on this below)
  - Require domain joined
  - Require approved client app

- Session
  - App enforced (in preview release)
  - Proxy enforced (in preview release)

Once these sections have been completed the policy just needs to be enabled to take effect.
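The same choices made in the portal can also be expressed as data, which helps when reviewing or versioning policies. The sketch below only assembles a policy body modeled on the Microsoft Graph `conditionalAccessPolicy` resource (to our understanding, the shape accepted by the Graph `/identity/conditionalAccess/policies` endpoint); it sends nothing. Property names and values such as `compliantDevice` and `mobileAppsAndDesktopClients` should be checked against the current Graph documentation, the helper name is hypothetical, and the group ID is a placeholder.

```python
import json
from typing import List


def build_ca_policy(name: str, group_ids: List[str], require_all: bool = False) -> dict:
    """Build a Conditional Access policy body in the (assumed) Graph
    conditionalAccessPolicy shape. This only builds JSON; nothing is sent."""
    return {
        "displayName": name,
        # Start disabled; enabling the policy is the final step described above.
        "state": "disabled",
        "conditions": {
            "users": {"includeGroups": group_ids},
            "applications": {"includeApplications": ["Office365"]},
            "platforms": {"includePlatforms": ["iOS", "android", "windows"]},
            "clientAppTypes": ["browser", "mobileAppsAndDesktopClients"],
        },
        "grantControls": {
            # "OR": require one of the selected controls; "AND": require all.
            "operator": "AND" if require_all else "OR",
            "builtInControls": ["mfa", "compliantDevice"],
        },
    }


# "<group-object-id>" is a placeholder for a real Azure AD group object ID.
body = build_ca_policy("Require MFA or compliant device", ["<group-object-id>"])
print(json.dumps(body, indent=2))
```

Creating the policy disabled and switching it on only after reviewing who it targets is a cautious choice, not a requirement.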

A word about Device Compliance

In order to select the ‘Require device to be marked as compliant’ option in the Grant section of a Conditional Access policy’s Access Controls, you will need to create a device compliance policy (or several, depending on your needs). Device compliance policies are pretty much what they sound like:

a set of rules that indicate whether a managed device complies with your organization’s requirements. Depending on whether the device in question does or does not meet the compliance policy, it will be marked as either ‘Compliant’ or ‘Not Compliant’.

For a device compliance policy to work on a given device, the device must be managed by Intune. It is beyond the scope of this article to get into the details of how devices are enrolled and managed in Intune, but at a high level Intune can manage both personally owned and corporate-owned devices.

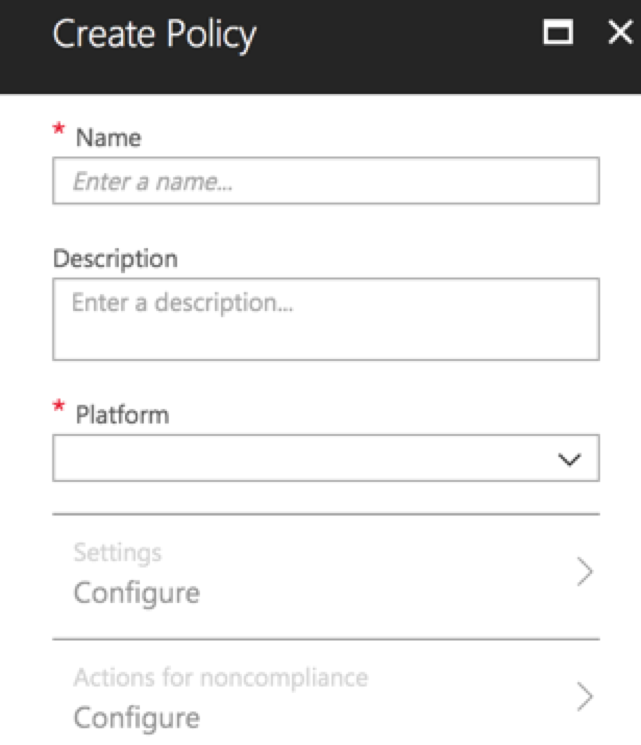

To create a Device Compliance policy, open up your Azure portal, navigate to Intune > Device Compliance > Policies and click “+ Create Policy”.

After entering a Name and selecting a platform to apply the policy to, click on Settings>Configure.

The policy settings have three categories of configuration. Note: The number of options under each category will change based on the OS platform that was selected.

- Device Health (settings vary based on OS. Examples for Win10 include Require BitLocker and Require code integrity)

- Device Properties (settings vary based on OS. Examples for Win10 include Minimum OS version and Maximum OS version)

- System Security (settings vary based on OS. Examples for Win10 include Require a password to unlock device and block simple passwords)
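For reference, the Win10 examples above map fairly directly onto properties of the Microsoft Graph `windows10CompliancePolicy` resource, which Intune exposes under `deviceManagement/deviceCompliancePolicies`. The sketch below only builds that JSON, grouped by the portal’s three categories. The property names reflect our understanding of the Graph schema, the helper name is hypothetical, and the version bounds are arbitrary examples; check the current documentation (including any required actions-for-noncompliance settings) before using anything like it.

```python
import json


def build_win10_compliance_policy(name: str, min_os: str, max_os: str) -> dict:
    """Build a compliance policy body modeled on the (assumed) Graph
    windows10CompliancePolicy resource. Nothing is sent."""
    return {
        "@odata.type": "#microsoft.graph.windows10CompliancePolicy",
        "displayName": name,
        # Device Health
        "bitLockerEnabled": True,
        "codeIntegrityEnabled": True,
        # Device Properties
        "osMinimumVersion": min_os,
        "osMaximumVersion": max_os,
        # System Security
        "passwordRequired": True,
        "passwordBlockSimple": True,
    }


# Example version bounds only; choose values that match your own fleet.
policy = build_win10_compliance_policy("Win10 baseline", "10.0.16299.0", "10.0.17763.0")
print(json.dumps(policy, indent=2))
```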

Once the policy is created, it must be assigned. Click on ‘Assignments’ and choose either a group or ‘All Users’. Warning: applying policies to all users is not recommended, as there could be unintended consequences. The main point is to know who you are applying policies to, so as to minimize surprises.
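The same group-first discipline can be built into automation. The sketch below assumes the Graph `assign` action on a device compliance policy takes a list of assignments, each with a `groupAssignmentTarget`; verify that against the current Graph documentation. The function name and placeholder ID are hypothetical, and refusing an empty list is a deliberate guard that mirrors the advice against assigning to ‘All Users’.

```python
from typing import List


def build_compliance_assignment(group_ids: List[str]) -> dict:
    """Build an (assumed) Graph `assign` action body that targets explicit groups.

    An empty list is rejected rather than silently widened to all users."""
    if not group_ids:
        raise ValueError("specify at least one target group instead of all users")
    return {
        "assignments": [
            {
                "target": {
                    "@odata.type": "#microsoft.graph.groupAssignmentTarget",
                    "groupId": gid,
                }
            }
            for gid in group_ids
        ]
    }


# "<pilot-group-id>" is a placeholder for a real Azure AD group object ID.
print(build_compliance_assignment(["<pilot-group-id>"]))
```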

Once a compliance policy has been assigned, each targeted user’s device(s) must check in so that the device can run the compliance scan and return a compliant/not compliant result. This compliance assessment can then be used in a Conditional Access policy as described in the previous section.
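To make the hand-off between the two policy types concrete, here is a simplified model: a device’s check-in report is compared against a compliance policy, and the resulting state feeds a Conditional Access grant that requires a compliant device. Everything here (`DeviceReport`, `CompliancePolicy`, `evaluate`, `grant_access`) is hypothetical and covers only a few of the Win10 settings mentioned above; Intune’s real evaluation is richer.

```python
from dataclasses import dataclass
from typing import Tuple


def parse_version(v: str) -> Tuple[int, ...]:
    """Turn a dotted version like '10.0.17134.0' into a comparable tuple."""
    return tuple(int(part) for part in v.split("."))


@dataclass
class DeviceReport:
    """Hypothetical state reported by a device at check-in."""
    bitlocker: bool
    code_integrity: bool
    os_version: str
    password_required: bool


@dataclass
class CompliancePolicy:
    """Hypothetical subset of the Win10 compliance settings."""
    require_bitlocker: bool
    require_code_integrity: bool
    min_os: str
    max_os: str
    require_password: bool


def evaluate(policy: CompliancePolicy, report: DeviceReport) -> str:
    """Mark the device Compliant only if every required setting is satisfied."""
    version = parse_version(report.os_version)
    os_ok = parse_version(policy.min_os) <= version <= parse_version(policy.max_os)
    ok = (
        (report.bitlocker or not policy.require_bitlocker)
        and (report.code_integrity or not policy.require_code_integrity)
        and os_ok
        and (report.password_required or not policy.require_password)
    )
    return "Compliant" if ok else "Not Compliant"


def grant_access(compliance_state: str) -> bool:
    """Models a policy whose only grant control is 'Require device to be marked as compliant'."""
    return compliance_state == "Compliant"


baseline = CompliancePolicy(True, True, "10.0.16299.0", "10.0.17763.0", True)
good = DeviceReport(True, True, "10.0.17134.0", True)
no_bitlocker = DeviceReport(False, True, "10.0.17134.0", True)
print(evaluate(baseline, good), grant_access(evaluate(baseline, good)))                  # Compliant True
print(evaluate(baseline, no_bitlocker), grant_access(evaluate(baseline, no_bitlocker)))  # Not Compliant False
```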

Final thoughts

In wrapping all of this up, there are a few things to keep in mind. First, how Intune/Conditional Access licensing applies to non-employees is unclear. So anyone looking to use Microsoft’s Conditional Access to address non-employee scenarios will need to do further digging.

Second, while it may seem mind-numbingly obvious, organizations that are already invested in Azure AD and O365 should take a serious look at Microsoft’s Conditional Access. With the proliferation of security tools in the market, it is sadly true that many organizations continue purchasing more tools when they may already have an adequate solution in hand.

In addition, Microsoft is taking cloud security very seriously and, from what I can tell, will continue to invest in improving its security solutions.

Related Links:

- Intune/EMS Subscription Levels

- Microsoft Conditional Access

- Integrating Threat Defense to Intune for compliance policies

CYDERES Announces Availability of EMDR Service

KANSAS CITY, Mo., April 6, 2018 /PRNewswire/ — CYDERES, the security-as-a-service division of Fishtech Group, today announced the availability of CYDERES EMDR, its Managed Detection and Response (MDR) service that helps organizations with detection, investigation, remediation, and proactive hunting of threats.

As security breaches continue to escalate in pace and scope, CYDERES EMDR provides a human-led, machine-driven managed detection and response service that integrates security tools with exceptional experts to drive automated outcomes via a proprietary platform. CYDERES supplies the people, process, and technology to help organizations manage cybersecurity risks, detect threats, and respond to security incidents in real time.

“CYDERES answers a problem that wasn’t being solved – how organizations can defend their data and stay focused on their core business,” said Fishtech CEO and Founder Gary Fish. “Our proprietary platform is revolutionary in the way it gathers disparate data together for effective, real-time threat detection.”

The CYDERES EMDR offering combines threat detection, investigation, remediation, and proactive threat hunting through:

- Technology independence

  We believe organizations must be free to select the right security solutions for their needs. The CYDERES platform drives automated outcomes and real-time interdiction leveraging those technologies, without requiring a specific set of security tools or locking you in to a proprietary SIEM.

- Coverage across modern hybrid, private and public cloud, plus on-premise environments

  Our team includes experienced SecDevOps experts and Certified Cloud Security Professionals who can help secure on-premise environments as well as a migration to the cloud.

- Action-oriented

  We don’t route alerts. A lot of MSSPs create more work for their customers. We’re here to serve as a true force multiplier to your team and drive real-time interdiction of threats.

“Organizations are under constant attack and prevention alone is failing,” said Fishtech CISO Eric Foster, who leads the CYDERES team. “Cybersecurity today requires actively detecting threats and responding in real time. While that’s not feasible for most organizations, we are committed to offering legendary service at a fair price to enable organizations to meaningfully improve their detection and response capabilities.”

In March, Fishtech broke ground on its 20,000 square foot Cyber Defense Center, which will house the CYDERES team. Fish and Foster will demo the CYDERES platform during the upcoming 2018 RSA Conference.

About Fishtech Group

Fishtech delivers operational efficiencies and improved security posture for its clients through cloud-focused, data-driven solutions.